Imaging Hangar: Building the virtual animal kingdom

Automated tracking methods, data stream processing, and interactive virtual environments

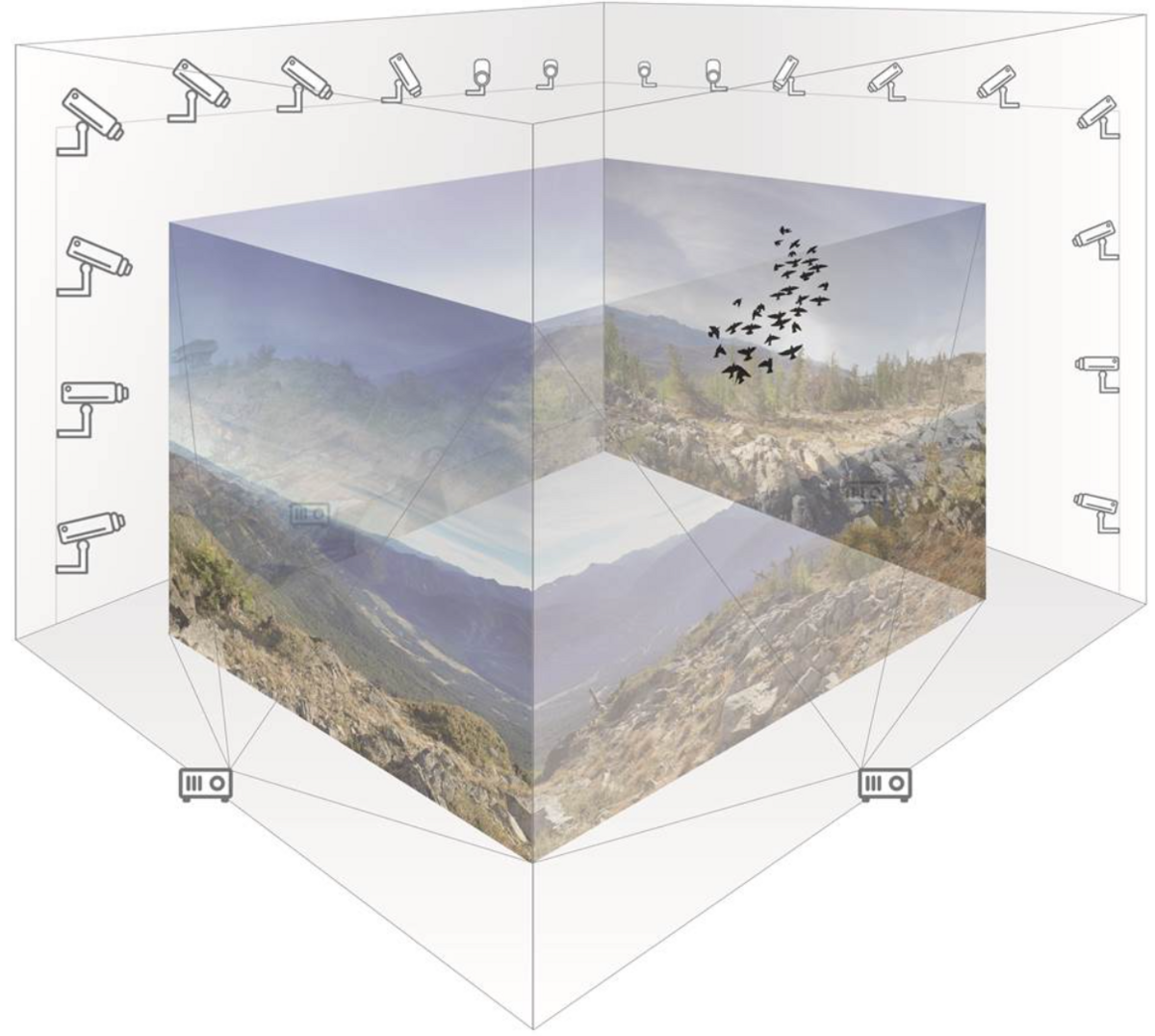

Studying collective behaviour under controlled conditions requires artificially recreating an animal’s social environment. Enter the Imaging Hangar. In this globally unique facility, computer vision tracks individuals and their interactions to build a “social network”, which a graph database system analyses continuously. Computer graphics then adapt the virtual environment in real time, creating a reactive, naturalistic setting for the study of collectives.
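The idea of turning tracked positions into a “social network” can be illustrated with a minimal sketch. It assumes that two individuals “interact” whenever they are closer than a fixed radius in a frame. Each such encounter adds weight to the edge between them. The input format, the 0.5 m radius, and the helpers `build_network` and `degree` are hypothetical. The sketch also keeps the graph in an in-memory dict rather than in the graph database system the facility uses, so it does not describe the Hangar's actual pipeline.

```python
import math
from itertools import combinations

# Hypothetical input format: one dict per video frame, mapping an
# individual's ID to its tracked (x, y) position in metres.
Frame = dict[str, tuple[float, float]]
# Undirected weighted edges: {ID_a, ID_b} -> number of frames in proximity.
Edges = dict[frozenset[str], int]


def update_social_network(edges: Edges, frame: Frame, radius: float) -> None:
    """Add 1 to the weight of every pair of individuals closer than `radius`."""
    for (a, pos_a), (b, pos_b) in combinations(frame.items(), 2):
        if math.dist(pos_a, pos_b) < radius:
            pair = frozenset((a, b))
            edges[pair] = edges.get(pair, 0) + 1


def build_network(frames: list[Frame], radius: float = 0.5) -> Edges:
    """Accumulate proximity edges over a sequence of frames.

    The 0.5 m default interaction radius is an illustrative assumption.
    """
    edges: Edges = {}
    for frame in frames:
        update_social_network(edges, frame, radius)
    return edges


def degree(edges: Edges, individual: str) -> int:
    """Number of distinct partners an individual has interacted with."""
    return sum(1 for pair in edges if individual in pair)


# Usage: two frames with three tracked individuals.
frames = [
    {"A": (0.0, 0.0), "B": (0.3, 0.0), "C": (2.0, 2.0)},
    {"A": (0.1, 0.0), "B": (0.4, 0.1), "C": (0.5, 0.2)},
]
network = build_network(frames)
```

In this example, A and B are within 0.5 m of each other in both frames, so their edge has weight 2. C is far away in the first frame and close to both others in the second, so it gains one encounter with each. In a live system, each frame's update would presumably be streamed into the graph store instead of a dict. Queries such as `degree` would then run there.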

Interactive virtual environments combine real-time tracking, behavioural analysis and online data processing, which together allow us to react to the behaviour of organisms as it happens. Real-time rendering methods are then needed to update the environment. This has been achieved for some species (flies, fish, mice) in small environments, and we will extend the approach to other species, such as locusts and birds, in large environments.

For tracking, it is sometimes possible to train data-driven, low-level predictive models of the appearance and motion of organisms. These can use different types of sensor data (multi-view video and depth, ultrasonic localization tags, etc.). Tracking must also work across a wide range of scales and determine location and posture quickly. This will be particularly challenging when a large number of individuals are tracked at once.

Reactive environments also require real-time processing, and the volume of the data stream will fluctuate strongly with the number and movement of the tracked individuals. We need to detect and identify important (possibly exceptionally important) events despite these spikes and lulls in the data. To do so, we must manage the quality of service, for example by deliberately reducing the accuracy of the detected events in exchange for lower computational overhead.

Finally, reaction time is critical and large projections require vast numbers of pixels. We will therefore have to synthesize renditions with minimal computational effort that different animals nevertheless perceive as realistic. This requires organism-specific rendering, which is also important for a deeper understanding of the sensory systems and visual processing of different species.
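The quality-of-service trade-off described above (lower accuracy of detected events in exchange for lower computational overhead) can be sketched as a per-frame choice among processing levels. The sketch assumes that each level has a fixed, known cost per tracked individual. Under that assumption, the scheduler picks the most accurate level that fits a fixed compute budget. The level names, costs, and the `choose_level` helper are hypothetical illustrations, not the project's actual scheduler.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccuracyLevel:
    name: str
    cost_ms_per_individual: float  # hypothetical processing cost per individual


# Ordered from most to least accurate; names and costs are illustrative only.
LEVELS = [
    AccuracyLevel("full pose", 2.0),
    AccuracyLevel("keypoints only", 0.8),
    AccuracyLevel("centroid only", 0.2),
]


def choose_level(n_individuals: int, budget_ms: float,
                 levels: list[AccuracyLevel] = LEVELS) -> AccuracyLevel:
    """Return the most accurate level whose estimated cost fits the per-frame budget.

    If even the cheapest level is over budget, it is returned anyway: accuracy
    is reduced as far as possible rather than the frame being dropped.
    """
    for level in levels:
        if level.cost_ms_per_individual * n_individuals <= budget_ms:
            return level
    return levels[-1]


# Usage: a ~33 ms budget corresponds roughly to processing at 30 frames per second.
for n in (10, 30, 100, 500):
    print(n, choose_level(n, budget_ms=33.0).name)
```

With these made-up numbers, 10 individuals receive full pose estimation and 30 fall back to keypoints. At 100 or more, processing is reduced to centroids. A real system would likely measure costs online and add hysteresis so the level does not flicker between frames.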

Publications

- Chondrogiannis T, Bornholdt J, Bouros P, Grossniklaus M (2022) History Oblivious Route Recovery on Road Networks. The 30th International Conference on Advances in Geographic Information Systems (SIGSPATIAL ’22).

- Waldmann U, Naik H, Máté N, Kano F, Couzin I, Deussen O, Goldlücke B (2022) I-MuPPET: Interactive Multi-Pigeon Pose Estimation and Tracking. DAGM GCPR 2022: Pattern Recognition. Lecture Notes in Computer Science.

- Waldmann U, Bamberger J, Johannsen O, Deussen O, Goldlücke B (2022) Improving Unsupervised Label Propagation for Pose Tracking and Video Object Segmentation. DAGM GCPR 2022: Pattern Recognition. Lecture Notes in Computer Science.

- Giebenhain S, Waldmann U, Johannsen O, Goldluecke B (2023) Neural Puppeteer: Keypoint-Based Neural Rendering of Dynamic Shapes. In: Wang L, et al. (eds) Computer Vision – ACCV 2022. Lecture Notes in Computer Science, vol 13844. Springer, Cham.